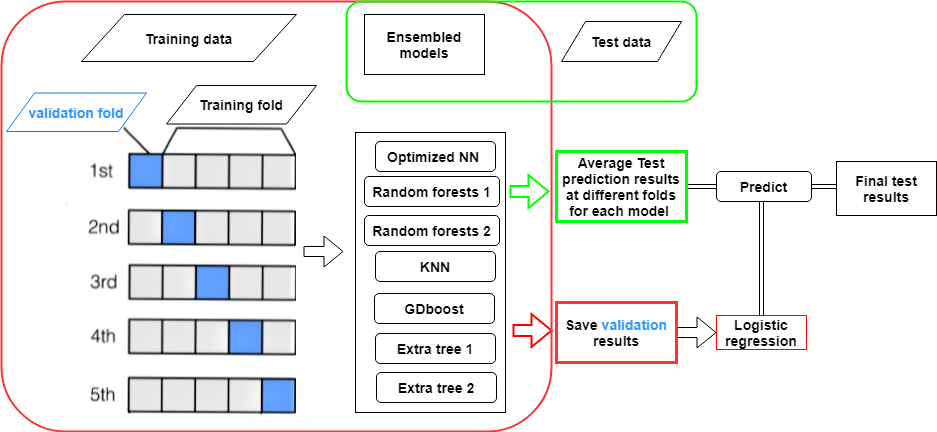

Ensemble models for classification (combine deep learning with machine learning)

Introduction:

Ensemble modeling is a process in which multiple diverse models are created to predict an outcome, either by using different modeling algorithms or by using different training data sets. The ensemble model then aggregates the predictions of the base models and produces one final prediction for unseen data. The motivation for using ensemble models is to reduce the generalization error of the prediction. As long as the base models are diverse, make largely independent errors, and each perform better than chance, the prediction error tends to decrease when the ensemble approach is used.

The approach draws on the wisdom of crowds to make a prediction. Even though the ensemble contains multiple base models, it acts and performs as a single model.
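
The aggregation step described above can be sketched with a simple majority vote. This is a minimal illustration, not the code from the linked project: the base "models" are simulated, and the names `majority_vote` and `base_accuracy` and the choice of three models that are each right 70% of the time are assumptions made for the example. With independent errors, three such voters should agree with the truth about 78% of the time (0.7³ + 3 · 0.7² · 0.3 = 0.784).

```python
import numpy as np


def majority_vote(predictions):
    """Combine binary (0/1) predictions of shape (n_models, n_samples).

    A sample is labeled 1 when more than half of the models vote 1.
    """
    predictions = np.asarray(predictions)
    votes_for_one = predictions.sum(axis=0)
    return (2 * votes_for_one > predictions.shape[0]).astype(int)


# Hypothetical setup: simulated base models with independent errors.
rng = np.random.default_rng(0)
n_samples, n_models, base_accuracy = 10_000, 3, 0.7

y_true = rng.integers(0, 2, size=n_samples)

# Each simulated model is correct on a sample with probability base_accuracy,
# independently of the other models.
is_correct = rng.random((n_models, n_samples)) < base_accuracy
base_preds = np.where(is_correct, y_true, 1 - y_true)

ensemble_pred = majority_vote(base_preds)

base_acc = (base_preds == y_true).mean(axis=1)
ensemble_acc = (ensemble_pred == y_true).mean()

print("base model accuracies:", np.round(base_acc, 3))
print("ensemble accuracy:    ", round(float(ensemble_acc), 3))
```

The gain depends on the independence assumption. If the simulated models made the same mistakes on the same samples, the vote would be no better than a single model.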

Why Ensemble modeling?

There are two major benefits of Ensemble models:

1. Better prediction

2. More stable model

The aggregate opinion of multiple models is less noisy than the opinion of any single model. In finance this is called "diversification": a mixed portfolio of many stocks will be much less variable than any one of the stocks alone.

This is also why an ensemble of models will usually perform better than an individual model. One caution with ensemble models is overfitting, although bagging largely takes care of it.
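
The diversification argument can be checked numerically. The sketch below is an illustration under stated assumptions rather than part of the project: each hypothetical model's output is the true value plus independent Gaussian noise, and the values of `n_models`, `noise_std`, and `true_value` are arbitrary. Under those assumptions, averaging M models should shrink the standard deviation of the prediction by roughly a factor of √M.

```python
import numpy as np

# Hypothetical setup: every model predicts the true value plus its own
# independent noise. All numbers here are illustrative assumptions.
rng = np.random.default_rng(42)
n_models, n_trials, noise_std = 10, 20_000, 1.0
true_value = 0.5

# Rows are repeated trials, columns are the individual models' predictions.
model_outputs = true_value + rng.normal(0.0, noise_std, size=(n_trials, n_models))

# The ensemble prediction is the plain average across models.
ensemble_outputs = model_outputs.mean(axis=1)

single_std = model_outputs[:, 0].std()
ensemble_std = ensemble_outputs.std()

print("std of one model:       ", round(float(single_std), 3))
print("std of averaged ensemble:", round(float(ensemble_std), 3))
print("noise_std / sqrt(M):     ", round(noise_std / np.sqrt(n_models), 3))
```

Real base models are rarely fully independent, so the reduction in practice is smaller than this idealized case suggests. The more correlated the models' errors are, the less averaging helps.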

Project link: https://github.com/tankwin08/ensemble-models-ML-DL-